
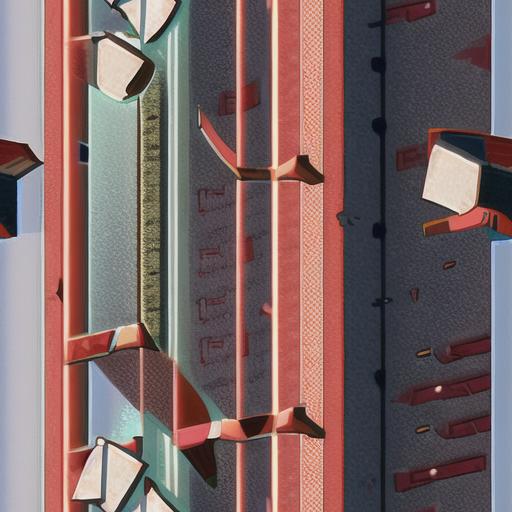

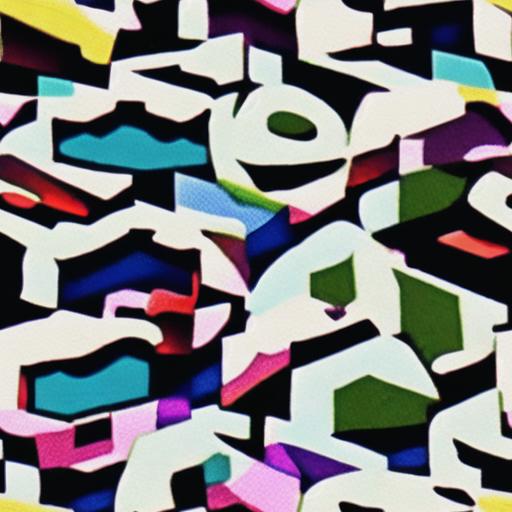

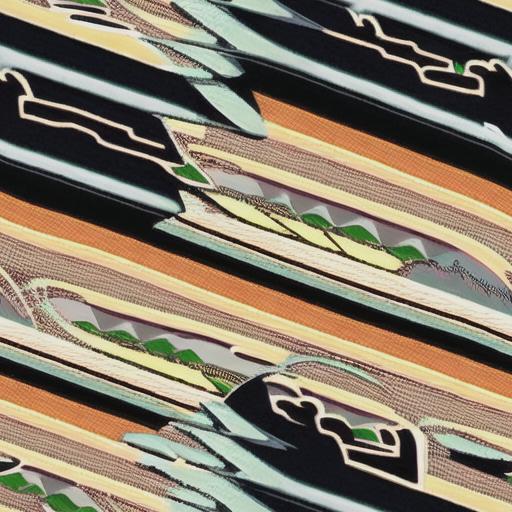

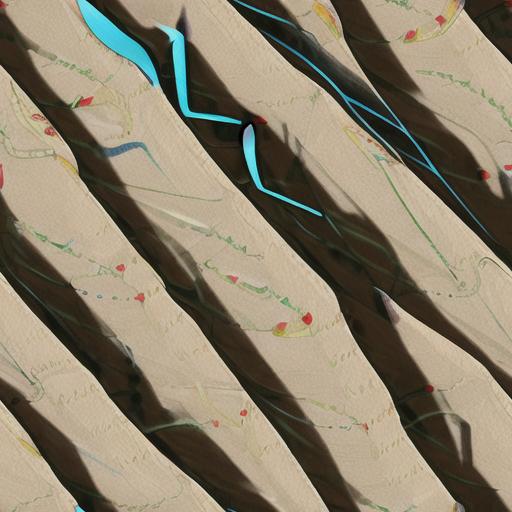

For the past couple of months I've been working on larger-scale LDM training (you can, at least for now, build a Midjourney at home if you really want to; it's not that big a deal). At this point I've written like 70,000 words in my diary about a half dozen experiments with it, and generated mountains of product ranging from replicative to somewhere just south of sublime, but I can summarize most of it as: (1) oh boy!, (2) oh no!, (3) Jesus, that's fantastic! We're getting the technicolor dreamcoat cyberdystopia, at least. I'll try to ease you into this gently, because, well, I've repaved the U-Net, completely rebuilt the VAE decoder, and taught the thing only a thousand new concepts or so. I've made a couple of mistakes (this is just v1), but it's awesome and quite capable of melting your face off. Hopefully not literally -- well, maybe literally. Accordingly, I'm going to mostly share some of the most unthreatening, feel-good fluff possible. Mostly. We're going to have FUN with this stuff while we can. You feeling it?

The short explanation for these is that I've hardwired into my LDM a set of aesthetic switches useful for 2-D illustration, by appropriately annotating the training set (a large conglomeration of cGAN training sets I had constructed previously, now being enriched with all sorts of strange and wonderful and unlikely things). Some of these switches mean specific things: 'a finely constructed figure with confident lines', 'a sculpted feel, this looks as if it belongs in three-dimensional space', 'correct hands in one of many specific poses', 'a low resolution or grainy image', 'well-composed', 'an especially instructive example', etc. Others mean less concrete things: there are switches for moods, expressions, 'vibes', even je ne sais quoi: ineffable things, concepts I can't necessarily put into words concisely but wouldn't mind the model learning.

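
The post doesn't say how the switches are encoded. One common approach, assumed here purely for illustration, is to reserve a token for each switch and prepend the active tokens to every image's caption, so the text encoder learns them like any other word. The token strings, the `Example` record, and `annotate` below are all hypothetical, not the author's pipeline.

```python
from dataclasses import dataclass, field

# Hypothetical switch vocabulary: these token strings are placeholders,
# not the author's actual labels.
SWITCH_TOKENS = {
    "confident_lines": "<sw:confident-lines>",
    "sculpted": "<sw:sculpted>",
    "correct_hands": "<sw:correct-hands>",
    "low_res": "<sw:low-res>",
    "well_composed": "<sw:well-composed>",
    "instructive": "<sw:instructive>",
}


@dataclass
class Example:
    """One training record (hypothetical): an image, its caption, its switches."""
    image_path: str
    caption: str
    switches: set[str] = field(default_factory=set)


def annotate(example: Example) -> str:
    """Return the training caption with the active switch tokens prepended."""
    unknown = example.switches - set(SWITCH_TOKENS)
    if unknown:
        raise ValueError(f"unknown switches: {sorted(unknown)}")
    # Sort by switch name so the same combination always yields the same prefix.
    tokens = [SWITCH_TOKENS[name] for name in sorted(example.switches)]
    return " ".join(tokens + [example.caption])
```

Keeping the prefix in a fixed order means a given combination of switches always reads the same way to the text encoder; at sampling time you turn a switch "on" by putting its token in the prompt.
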
You can get quite abstract with the concepts you impress upon your model: current-generation LDMs are impressively good low-shot learners. If you can identify something when you see it, you can teach it to your model, and from there it's the slave of the lamp. Then there are the aesthetic preference switches. These are magic, special, maybe a little dangerous. They're legitimately fire, but they're also the thing that can turn a Geordi La Forge into a Reginald Barclay, if you're a big enough dork to understand that reference. I could explain how I make these, but you'd wonder what planet I came from if I told you, and there's a small but non-zero chance it'll all be banned by international treaty at some point anyway, so no matter. It's not automated aesthetic scoring, and I'm not paying people in Kenya $2/hour to do it (OpenAI leaking us a little of the near future of human work, hooray!); it's a third, better thing. Suffice it to say that these switches represent an embedded distillation of my own peculiar tastes, extracted from my imagination in an ethical but cloud-cuckoo manner. This is a very early cut of it -- this is only the first finetune I've done with any sort of aesthetic preference built in (i.e., not added afterward with textual inversion or a DreamBooth finetune), and when v1 figured out how the hell it worked (somewhere around 1.6 million training steps) and I could see it in the generations, I whooped for joy. Not sure if it's at all compelling to anybody else -- I mean, it's made by me for me -- but who really cares? It legitimately does what I expected it to do: it makes pictures the way I want to see them, to my specific tastes, whims, and values. Like the Hashshashin of legend, with algorithmic perfection, these pictures lead me to see the gardens of Allah. (Then, I either join them or die. Maybe the aptest metaphor for AI?) Plus, I can just decree that every Tesla vehicle be rendered on fire, and so shall it be.

So what are we going to make today with this infernal contraption (recipe: one part John von Neumann, one part George Herriman, one part B.F. Skinner)? How about some abstract textures? Nice and unthreatening and safe. They wrap around toroidally, so you can tile them seamlessly both vertically and horizontally. You can use them as wallpaper, wrapping paper, the background for your Geocities page, a texture in your indie 2-D/3-D game, something to print on toilet paper so you can wipe your butt with AI art, whatever. There's something for everybody in my bag o' toys! The only conditioning used for these images is the quality switches: the 'high decile preference' switch is on (i.e., that's what our diffuser is working toward), and instead of the usual unconditional input for the unguided branch of the guidance, we condition that branch on the embedding for the 'bottom decile preference' switch (i.e., that's what our diffuser is working away from). There is no subject and no 'prompt' per se -- I just told it to match my "good" preference as closely as it can while avoiding the "bad" stuff.
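
Read as classifier-free guidance, the setup above is a "negative" conditioning: the branch that would normally be unconditional is conditioned on the bad switch instead. Below is a minimal sketch of one guidance step under that reading. The `Denoiser` signature, `guided_eps`, and plain Python lists standing in for latent tensors are assumptions for illustration, not the author's code.

```python
from typing import Callable

Vector = list[float]
# Hypothetical noise-predictor signature: (noisy latent, timestep, conditioning
# embedding) -> predicted noise. A real U-Net works on tensors, not lists.
Denoiser = Callable[[Vector, int, Vector], Vector]


def guided_eps(denoiser: Denoiser, latent: Vector, t: int,
               emb_good: Vector, emb_bad: Vector, scale: float) -> Vector:
    """One classifier-free-guidance-style noise estimate with a negative condition.

    The branch that would normally be unconditional is conditioned on emb_bad,
    so the estimate moves toward emb_good and away from emb_bad:
        eps = eps_bad + scale * (eps_good - eps_bad)
    """
    eps_good = denoiser(latent, t, emb_good)
    eps_bad = denoiser(latent, t, emb_bad)
    return [b + scale * (g - b) for g, b in zip(eps_good, eps_bad)]
```

With `scale = 1` this reduces to the plain "good"-conditioned prediction; larger scales push harder away from the "bad" embedding. Swapping `emb_good` and `emb_bad` gives the reverse, pessimizing guidance.
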

Sotto voce: Of course, you can also do this in reverse, guiding toward bad generations and away from good ones, but I'm not going to subject you to any of that output. Really, I'm not. It's not recommended. Most of the 24 images I pessimized this way were merely extremely crummy, but then there was Image Number Three, the only still image I've ever seen that deserves a seizure warning. So, no, not a good idea; in fact, fuck doing that forever. (Isn't it great/awful? It's like having a CIA torture device in your living room!)

I also changed the topology of the model -- basically I just overrode the sampling functions so they work on a torus instead of a plane. This is what forces it to produce images that tile both vertically and horizontally. Conditioning only on quality switches while rendering on a torus consistently produces abstract patterns: without guided conditioning pulling the image toward a specific thing, abstraction is far easier to tile, so that's what you normally get (although occasionally the designs are, or suggest, objects).

The aesthetic embedding guides it toward symmetry, appealing (to me) choices of color, and, at times, something approaching coherence and confidence. The sense (or illusion) of confidence is important: even if it isn't drawing a picture of anything in particular, it should at least appear to be making a statement. These are interesting because, although they're pictures of nothing specific and are among the simplest and gentlest images this model can create, they are strongly biased toward my aesthetic preference embedding, and even though I've barely started, having labeled only about a quarter of the training set well enough to deduce aesthetic preference at all... well, it works. These came from a place in me that no words, emotion, or love can ever touch, but that floating point arithmetic can apparently approximate to high fidelity.
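
The post doesn't spell out how the sampling functions were overridden to work on a torus. A common way to get seamless tiling (an assumption here, not necessarily what the author did) is to make every convolution wrap around at the borders, e.g. `padding_mode='circular'` on PyTorch's `nn.Conv2d`, so a pixel on the left edge sees the right edge as its neighbour and the top edge sees the bottom. The pure-Python sketch below shows the idea on a small grid; `conv2d_torus` and `roll` are illustrative helpers.

```python
Grid = list[list[float]]


def conv2d_torus(image: Grid, kernel: Grid) -> Grid:
    """'Same'-size 2-D cross-correlation with circular (wraparound) boundaries.

    Indices are taken modulo the height and width, so the grid behaves like the
    surface of a torus rather than a bounded plane: there are no edges at all.
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2  # kernel centre; odd-sized kernels assumed
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += kernel[i][j] * image[(y + i - oy) % h][(x + j - ox) % w]
            out[y][x] = acc
    return out


def roll(image: Grid, dy: int, dx: int) -> Grid:
    """Cyclically shift a grid down by dy and right by dx (like numpy.roll)."""
    h, w = len(image), len(image[0])
    return [[image[(y - dy) % h][(x - dx) % w] for x in range(w)] for y in range(h)]
```

Because indices wrap, shifting the input cyclically shifts the output by the same amount: `conv2d_torus(roll(img, dy, dx), k) == roll(conv2d_torus(img, k), dy, dx)`. With zero padding that identity breaks at the borders, which is where seams tend to show up when you tile the result.
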
